Training a PyTorch neural network on cell-centered OpenFOAM fields

This post covers the use of the PyTorch C++ API for approximating cell-centered fields (pressure, temperature, density, etc.) in OpenFOAM using a Neural Network (NN). The training is done in an OpenFOAM application, the code can be generalized and ported into a library as described in this post. The unstructured Finite Volume method in OpenFOAM averages values at cell centers to ensure second-order accuracy on unstructured meshes. This defines the NN input as $\mathbf{x} \in \mathbb{R}^3$, a 3D vector (cell center), and the field value as the output. Although OpenFOAM works with tensors, this example only covers scalar fields, I’ll cover the generalization for tensors in another post.

The working application aiFoamLearn from the post gitlab.com/tmaric/openfoam-ml.

Disclaimer

This offering is not approved or endorsed by OpenCFD Limited, producer and distributor of the OpenFOAM software via www.openfoam.com, and owner of the OPENFOAM® and OpenCFD® trade marks.

PyTorch in an OpenFOAM application

Note that this post is written on a Linux system, using a fixed path to the PyTorch library, may differ across platforms.

Linking with PyTorch

You can generate a template OpenFOAM application using

?> foamNewApp aiFoamLearn

This generates the aiFoamLearn directory with the “.C” source code file and the Make build folder

?> ls aiFoamLearn/

Make aiFoamLearn.C

?> ls aiFoamLearn/Make/

files options

The build needs to include and link to the PyTorch C++ library. The files usually contain the list of source files to be compiled, in this case, aiFoamLearn.C, and it needs to be changed to make sure the binaries are not stored in the OpenFOAM system path that may require root rights

aiFoamLearn.C

EXE = $(FOAM_USER_APPBIN)/aiFoamLearn

We also have to edit Make/options to include PyTorch:

EXE_INC = \

-Wno-old-style-cast -Wno-redundant-move \

-I/usr/include/torch/csrc/api/include \

-I../../../src/mlSurface/lnInclude \

-I$(LIB_SRC)/finiteVolume/lnInclude \

-I$(LIB_SRC)/meshTools/lnInclude

EXE_LIBS = \

-L/usr/lib \

-ltorch \

-lfiniteVolume \

-lmeshTools

OpenFOAM (tag OpenFOAM-v2006) builds per default with the -std=c++11 option (using C++11 standard), to build OpenFOAM applications with PyTorch, the proper thing to do is to make sure that the option -std=c++2a is used when OpenFOAM is built, by modifying

$WM_PROJECT_DIR/wmake/rules/General/Gcc/c++

so that it contains

CC = g++ -std=c++2a

and re-building OpenFOAM. The improper thing to do is to append -std=c++2a to your Make/options file like this

EXE_INC = \

-std=c++2a

-Wno-old-style-cast -Wno-redundant-move \

-I/usr/include/torch/csrc/api/include \

...

because then you’ll be linking OpenFOAM libraries in your application that were compiled with the C++ 11 standard, with PyTorch and your application that are compiled with the C++17 standard. This may lead to undefined behavior and should be avoided.

Including headers

Include the PyTorch headers first, before including OpenFOAM headers

// Machine Learning with PyTorch

#include <torch/torch.h>

// OpenFOAM stuff

#include "fvCFD.H"

OpenFOAM and PyTorch assignment

At some point the NN will need $\mathbf{x} \in \mathbb{R}^3$ as input, i.e. cell centers from the OpenFOAM unstructured mesh. The thing is, there seems to be no templated operator in torch::Tensor along the lines of

template<typename SomeFlatArray>

torch::Tensor& torch::Tensor::operator=(

torch::Tensor& t,

SomeFlatArray const& v

)

{

// Somehow flatten t first

auto t_flattened = t.flatten();

for (decltype(t_flattened.size()) i = 0; i < t_flattened.size(); ++i)

t_flattened[i] = v[i];

return t;

}

or something like that. So that is why

torch::Tensor t = torch::zeros(3);

Foam::vector v(0,0,0);

t = v;

fails with a compilation error that states that an assingment operator to a Torch::tensor from an OpenFOAM vector is not available. To assign cell centers to a Torch::Tensor, this function can be used in OpenFOAM code, I placed this above the main function in this example OpenFOAM application

void assign(

torch::Tensor&& ttensor,

const Foam::vector& vec

)

{

ttensor[0] = vec[0];

ttensor[1] = vec[1];

ttensor[2] = vec[2];

}

Application options

I’ve written about adding options to OpenFOAM applications in this post, so I’ll just list them here shortly. We’ll need to know which field to train the NN on, as well as the shape of the NN to use (the hiddenLayers vector), the tolerance of the optimizer and the maximal number of iterations

argList::addOption

(

"field",

"<string>",

"Name of the volume (cell-centered) field approximated by the NN."

);

argList::addOption

(

"hiddenLayers",

"<int>",

"Number of layers in the neural network."

);

argList::addOption

(

"optimizerStep",

"<double>",

"Step of the optimizer."

);

argList::addOption

(

"epsilon",

"<double>",

"Training error tolerance."

);

argList::addOption

(

"maxIterations",

"<int>",

"Max number of iterations."

);

Application initialization

The application options are processed in setRootCase.H that handles application arguments. Setting the path to the simulation case, initializing the simulation time and the unstructured mesh are done as follows

#include "setRootCase.H"

#include "createTime.H"

// Train the last available time step.

instantList timeDirs = timeSelector::select0(runTime, args);

runTime.setTime(timeDirs[timeDirs.size() - 1], timeDirs.size() - 1);

#include "createMesh.H"

The application trains the NN on the field from the last time step of the simulation, so the timeDirs directory list is used select the last time step directory. Now we can check for the application options starting with the field name

if (!args.found("field"))

FatalErrorInFunction

<< "Missing name of the approximated field using.\n"

<< "Use -help for more info."

<< abort(FatalError);

const word fieldName = args.get<word>("field");

and initialize the fields

#include "createFields.H"

The createFields.H is the boilerplate header present in every OpenFOAM application (solver, utility, etc.). Here we are only working on cell-centered scalar fields, but the way OpenFOAM handles dimensions makes the field initialization relevant:

volScalarField vf

(

IOobject

(

fieldName,

runTime.timeName(),

mesh,

IOobject::MUST_READ,

IOobject::AUTO_WRITE

),

mesh

);

volScalarField vf_nn (vf.name() + "_nn", vf);

The vf_nn is the cell-centered field that will be used to evaluate the performance of the NN. It must have the same dimensions, and the same boundary conditions as the input field vf, so it’s initialized only with a different name. Next, the structure of the NN is read into a dynamic list of type DynamicList<label>, to make sure we can initialize NNs of different shapes and sizes

if (!args.found("hiddenLayers"))

FatalErrorInFunction

<< "Missing shape vector of the neural network.\n"

<< "Use -help for more info."

<< abort(FatalError);

// Read the hidden layer structure vector.

DynamicList<label> hiddenLayers(

args.get<DynamicList<label>>("hiddenLayers")

);

Using args.get<Type> and IStream construction are covered in another post.

Constructing the neural network

The input layer has three neurons, because we are using cell-center vectors $\mathbf{x} \in \mathbb{R^3}$ as arguments of the NN,

// Construct the neural network nn with a user-defined structure.

auto nn = torch::nn::Sequential();

// - Input layer are the 3 spatial coordinates in 3D.

nn->push_back(torch::nn::Linear(3, hiddenLayers[0]));

torch::nn::ReLU();

This works also with 2D OpenFOAM data, because OpenFOAM uses single cell-layer pseudo-2D unstructured meshes. Next, the hidden layers are constructed

// - Hidden layers

for (label L=1; L < hiddenLayers.size(); ++L)

{

nn->push_back(

torch::nn::Linear(hiddenLayers[L-1], hiddenLayers[L])

);

nn->push_back(torch::nn::ReLU());

}

and finally the 1D output layer

// - Output is 1D: value of the learned field.

nn->push_back(

torch::nn::Linear(hiddenLayers[hiddenLayers.size() - 1],1)

);

Preparing input data

The nn is ready for learning, but first, input data need to be initialized. PyTorch is vectorized, allows offloading to graphics processors, and similar, so OpenFOAM cell-centers and field values must be transfered to PyTorch tensor data.

// Prepare the training data: cell centers and values.

torch::Tensor points = torch::zeros(3 * mesh.nCells());

points = points.reshape({mesh.nCells(),3});

torch::Tensor values = torch::zeros(mesh.nCells());

values = values.reshape({mesh.nCells(),1});

const auto& cell_centers = mesh.C();

// - Foam::vector != torch::Tensor so assignment is required.

// - torch::Tensor::operator=(torch::Tensor&&, Foam::vector&&)

// would require the extension of torch::Tensor and this does

// the job.

forAll(cell_centers, cellI)

{

assign(points[cellI], cell_centers[cellI]);

values[cellI] = vf[cellI];

}

The need for reshape is described in another post. Here we use the assign function describe above, since we are training scalar (double) fields, special assignment is not necessary for the values. The requirement to transfer field values to PyTorch data structures causes a memory overhead, but the overhead in wall clock time is negligible, compared to the time it takes to actually train the NN.

Training the NN on an OpenFOAM field

The Adam optimizer is used with a user-defined optimizer step

// - Minimize the MLSE error at stencil points.

double optimizerStep =

args.getOrDefault<scalar>("optimizerStep", 0.05);

torch::optim::Adam optimizer(

nn->parameters(),

optimizerStep

);

initialize the training prediction and loss value tensors

torch::Tensor training_prediction =

torch::zeros_like(values);

torch::Tensor loss_values =

torch::zeros_like(values);

then enter the training loop

double epsilon =

args.getOrDefault<scalar>("epsilon", 1e-03);

double maxIterations =

args.getOrDefault<label>("maxIterations", 500);

size_t epoch = 1;

double max_mse = 0;

for (; epoch <= maxIterations; ++epoch)

{

optimizer.zero_grad();

training_prediction = nn->forward(points);

loss_values =

torch::mse_loss(training_prediction, values);

loss_values.backward();

optimizer.step();

max_mse = loss_values.max().item<double>();

std::cout << "Epoch = " << epoch

<< "\n max (Mean Square Error) = "

<< max_mse << std::endl;

if (max_mse < epsilon)

break;

}

Usually, one would split the training set into training and validation sets, I skipped this step here because the post is covering the APIs, and not aiming at high accuracy.

Error evaluation

The error evaluation is relatively straightforward: we evaluate the NN at every cell center and store the NN values into the vf_nn field, initialized in createFields.H:

// Evaluate cell-centered errors.

forAll(cell_centers, cellI)

{

vf_nn[cellI] = nn->forward(points[cellI]).item<double>();

}

// Correct boundary conditions using new cell-centered values.

vf_nn.correctBoundaryConditions();

The boundary values are not used in this example, so they have to be updated using the learned cell-centered values. Different error norms are reported, starting with $L_\infty$, normalized $L_\infty$, and the mean and normalized mean error.

error_c = Foam::mag(vf - vf_nn);

scalar vfMaxMag = Foam::mag(Foam::max(vf)).value();

scalar error_c_l_inf = Foam::max(error_c).value();

scalar error_c_l_inf_rel = error_c_l_inf / vfMaxMag;

scalar error_c_mean_rel = Foam::average(error_c).value() / vfMaxMag;

vf_nn.write();

error_c.write();

Info << "max(|field - field_nn|) = " << error_c_l_inf << endl;

Info << "max(|field - field_nn|)/|max(field)| = "

<< error_c_l_inf_rel << endl;

Info << "mean(|field - field_nn|)/|max(field)| = "

<< error_c_mean_rel << endl;

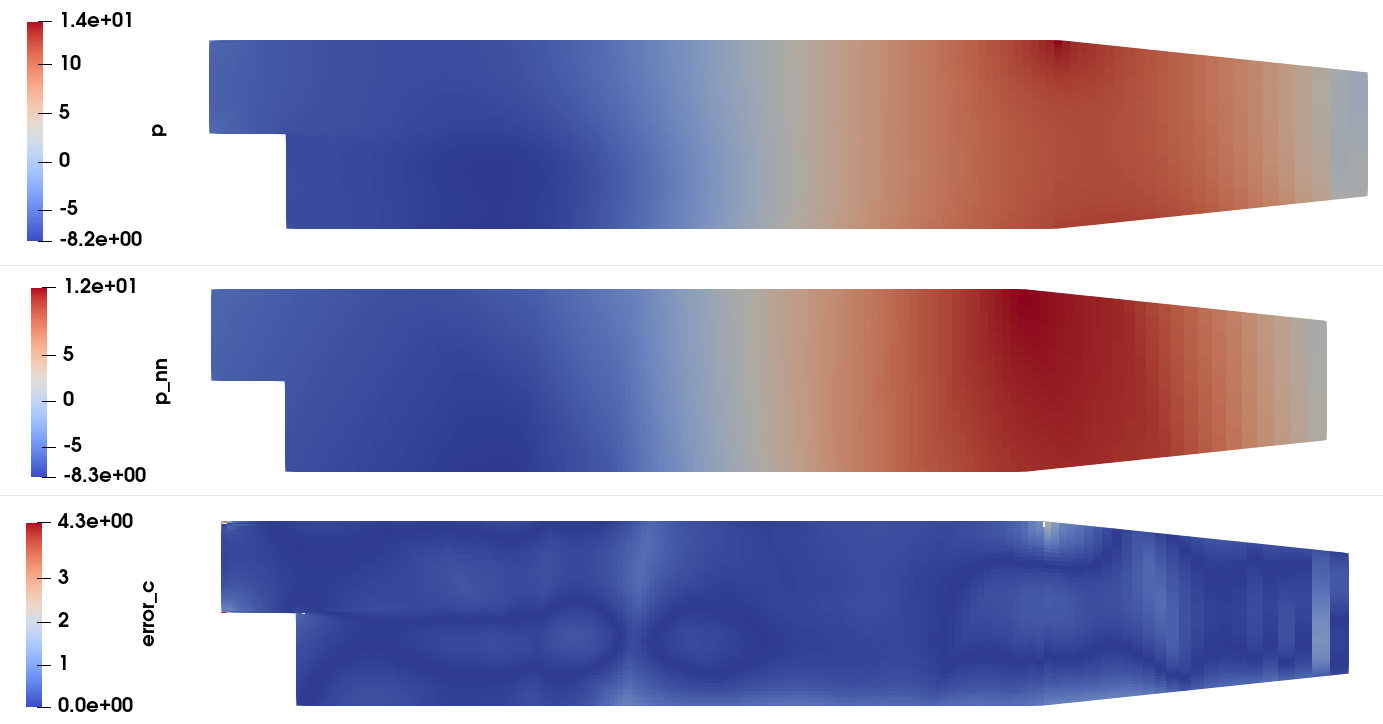

Learning the PitzDaily pressure field

Any OpenFOAM simulation that manipulates a scalar field can be used for training, I’ve used it with the Pitz-Daily steady state turbulent channel flow. The case can be found in

$FOAM_TUTORIALS/incompressible/simpleFoam/pitzDaily

The training ran on the pressure field in the last (converged) time step, using the following NN architecture and epsilon

?> aiFoamLearn -field p -hiddenLayers '(128 64 32)' -epsilon 0.01

The cell-centered error field contains absolute error values, and we can see the maximal value is quite high in some cells. To reduce the error, the alchemy of hyper-parameter tuning can be applied, figuring out a better NN architecture, activation function and optimizer step.